(ENG) DeepSeek-R1 Paper Review

Table of Contents

- 1. Introduction

- 2. Approach

- 3. Distillation

- 4. Experiment

- 5. Discussion (Unsuccessful Attempts)

- 6. Seoul National University NLP Lab Meeting Notes

1. Introduction

Key Features : RL via GRPO algorithm, "aha" moment, Distillation, limitations of the MCTS and PRM in training R1

DeepSeek-R1-Zero is the first-generation reasoning model by DeepSeek AI, trained via reinforcement learning without supervised fine-tuning. Its primary limitations were poor readability and language mixing.

2. Approach

2.1 Reinforcement Learning Algorithm on the Base Model (R-1 Zero)

GRPO

To reduce the training cost of reinforcement learning (RL), the critic model, which is typically the same size as the policy model, was omitted. Instead, Group Relative Policy Optimization (GRPO) was used, which estimates the baseline from group scores.

GRPO samples a group of outputs \(\{o_1, o_2, \dots, o_G\}\) from the old policy \(\pi_{\theta_{\text{old}}}\) and then optimizes the policy model \(\pi_{\theta}\) by maximizing the following objective:

\[\mathcal{J}_{GRPO}(\theta) = \mathbb{E}_{q \sim p(Q),\ \{o_i\}_{i=1}^{G} \sim \pi_{\theta_{old}}(O | q)} \left[ \frac{1}{G} \sum_{i=1}^{G} \left( \min \left( \frac{\pi_{\theta}(o_i | q)}{\pi_{\theta_{old}}(o_i | q)} A_i, \operatorname{clip} \left( \frac{\pi_{\theta}(o_i | q)}{\pi_{\theta_{old}}(o_i | q)}, 1 - \epsilon, 1 + \epsilon \right) A_i \right) - \beta \mathbb{D}_{KL} (\pi_{\theta} \| \pi_{ref}) \right) \right]\]

\[\mathbb{D}_{KL} (\pi_{\theta} \| \pi_{ref}) = \frac{\pi_{ref}(o_i | q)}{\pi_{\theta}(o_i | q)} - \log \frac{\pi_{ref}(o_i | q)}{\pi_{\theta}(o_i | q)} - 1\]

where,

- \(\epsilon\) & \(\beta\) are hyperparameters

- \(A_i\) is the advantage
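As a rough illustration of the objective above, the following sketch evaluates it for a single question at the level of whole outputs. The function name `grpo_objective`, the use of per-output log-probabilities as inputs, and the default values of `eps` and `beta` are assumptions made for this example, not values taken from the paper.

```python
import math

def grpo_objective(logp_new, logp_old, logp_ref, advantages, eps=0.2, beta=0.04):
    """Evaluate the GRPO objective for one question and a group of G outputs.

    logp_new, logp_old, logp_ref: log pi(o_i | q) under the current, old,
    and reference policies. advantages: A_i for each output.
    eps and beta are placeholder hyperparameter values (assumed).
    """
    total = 0.0
    for lp_new, lp_old, lp_ref, adv in zip(logp_new, logp_old, logp_ref, advantages):
        ratio = math.exp(lp_new - lp_old)                # pi_theta / pi_theta_old
        clipped = min(max(ratio, 1.0 - eps), 1.0 + eps)  # clip(ratio, 1 - eps, 1 + eps)
        surrogate = min(ratio * adv, clipped * adv)
        log_ref_ratio = lp_ref - lp_new                  # log(pi_ref / pi_theta)
        kl = math.exp(log_ref_ratio) - log_ref_ratio - 1.0
        total += surrogate - beta * kl
    return total / len(advantages)

# When all three policies agree, the ratio is 1 and the KL term is 0,
# so the objective reduces to the mean advantage.
same = [-1.0, -2.0]
value = grpo_objective(same, same, same, [0.5, 0.5])
```

In actual training the quantity would be computed on tensors and maximized by gradient ascent; the scalar version only shows how the clipping and the KL penalty enter each term.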

The advantage is computed using a group of rewards \(\{r_1, r_2, \dots, r_G\}\) corresponding to the outputs within each group.

\[A_i = \frac{r_i - \text{mean}(\{r_1, r_2, \dots, r_G\})}{\text{std}(\{r_1, r_2, \dots, r_G\})}\]

Rule Based Reward System

The reward decides the optimization direction of the RL. A rule-based reward system that mainly consists of two types of rewards is adopted.

- Accuracy Rewards

- TL;DR : Evaluates whether the response is correct

- e.g. : For LeetCode problems, a compiler can be used to generate feedback

- Format Rewards

- TL;DR : Is the <think> tag appropriately annotated

- A format reward that requires the model to place its thinking process between <think> and </think> tags
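The two reward types, and the way they feed the group-normalized advantage defined earlier, can be sketched as follows. The reward values (1.0 or 0.0), the exact-match correctness check, the plain sum of the two rewards, the use of the population standard deviation, and the zero-advantage fallback for a constant group are all assumptions of this example; the review does not specify them.

```python
import re
import statistics

# Assumed response shape: <think> ... </think> followed by <answer> ... </answer>
THINK_ANSWER = re.compile(r"^<think>.*?</think>\s*<answer>(.*?)</answer>$", re.DOTALL)

def format_reward(response: str) -> float:
    """1.0 if the response follows the think/answer tag format, else 0.0."""
    return 1.0 if THINK_ANSWER.match(response.strip()) else 0.0

def accuracy_reward(response: str, reference: str) -> float:
    """1.0 if the extracted answer equals the reference (exact match, assumed)."""
    match = THINK_ANSWER.match(response.strip())
    return 1.0 if match and match.group(1).strip() == reference.strip() else 0.0

def group_advantages(rewards):
    """A_i = (r_i - mean) / std over the group; zeros if all rewards are equal."""
    mean = statistics.mean(rewards)
    std = statistics.pstdev(rewards)
    if std == 0:
        return [0.0] * len(rewards)
    return [(r - mean) / std for r in rewards]

group = [
    "<think>2 + 2 = 4</think><answer>4</answer>",  # correct and well formatted
    "<think>maybe 5</think><answer>5</answer>",    # well formatted, wrong answer
    "4",                                           # no tags at all
]
rewards = [accuracy_reward(r, "4") + format_reward(r) for r in group]
advantages = group_advantages(rewards)
```

Outputs that score above the group mean receive a positive advantage and the others a negative one, which is what replaces the critic's baseline in GRPO.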

Training Template

This is the training template for DeepSeek-R1-Zero. The prompt portion is replaced with the question for the actual training.

A conversation between User and Assistant. The user asks a question, and the Assistant solves it. The assistant first thinks about the reasoning process in the mind and then provides the user with the answer. The reasoning process and answer are enclosed within <think> </think> and <answer> </answer> tags, respectively, i.e., <think> reasoning process here </think> <answer> answer here </answer>. User: prompt. Assistant:
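A minimal sketch of how the template might be filled in during training is shown below. The names `TEMPLATE` and `build_prompt` and the `{prompt}` placeholder syntax are illustrative choices for this example, not part of the paper.

```python
# Training template quoted above, with the word "prompt" turned into a placeholder.
TEMPLATE = (
    "A conversation between User and Assistant. The user asks a question, "
    "and the Assistant solves it. The assistant first thinks about the "
    "reasoning process in the mind and then provides the user with the answer. "
    "The reasoning process and answer are enclosed within <think> </think> and "
    "<answer> </answer> tags, respectively, i.e., <think> reasoning process here "
    "</think> <answer> answer here </answer>. User: {prompt}. Assistant:"
)

def build_prompt(question: str) -> str:
    """Substitute the actual question for the prompt slot."""
    return TEMPLATE.format(prompt=question)

example = build_prompt("Compute 1 + 1")
```

The model's generation then continues after "Assistant:", which is where the format reward expects the tagged reasoning and answer.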

Performance, Self-evolution Process and Aha Moment

The following figure depicts the performance trajectory of the R-1 Zero model on the AIME 2024 Benchmark throughout the RL training process.

The following is a brief explanation of each benchmark:

- AIME 2024: The American Invitational Mathematics Examination (AIME) is a 15-question, 3-hour test focusing on advanced high school mathematics, including algebra, geometry, and number theory. It serves as a qualifier for the United States Mathematical Olympiad.

- MATH-500: This dataset comprises 500 problems from the MATH benchmark, covering various topics such as algebra, calculus, and probability. It is designed to evaluate mathematical reasoning and problem-solving abilities of AI models.

- GPQA Diamond: The Graduate-Level Google-Proof Q&A Benchmark (GPQA) is a challenging dataset of 448 multiple-choice questions in biology, physics, and chemistry, crafted by domain experts. The “Diamond” subset represents the most difficult questions, assessing the advanced reasoning capabilities of AI models.

- LiveCodeBench: LiveCodeBench is a benchmark designed for evaluating large language models’ coding capabilities. It continuously collects new problems from platforms like LeetCode, AtCoder, and CodeForces, and assesses various aspects such as code generation, execution, and self-repair.

- CodeForces: CodeForces is a competitive programming platform that hosts regular contests where participants solve algorithmic problems under time constraints. It serves as a benchmark for evaluating coding and problem-solving skills, with a rating system to rank participants.

Aha moment of DeepSeek-R1-Zero

The significance of the “aha moment” is that through RL, the model autonomously develops advanced problem-solving strategies, given the right incentives. The model learns to rethink its approach, and it does so in an anthropomorphic tone (“aha!”).

2.2 Reinforcement Learning with Cold Start

Two questions arise from the results of DeepSeek-R1-Zero.

- Can reasoning performance be further improved or can convergence be accelerated by incorporating a small amount of high-quality data as a cold start?

- How can we train a user-friendly model that not only produces clear and coherent Chains of Thought (CoT) but also demonstrates strong general capabilities?

The pipeline of DeepSeek-R1 attempts to address these questions. It consists of four stages, outlined below (stages 1 to 4).

Stage 1: Cold Start

To prevent instability in the early phases of RL training, DeepSeek-R1 incorporates a cold start phase by fine-tuning the base model with a small dataset of high-quality long CoT data. This dataset is constructed through multiple approaches, including:

- Few-shot prompting with long CoT examples.

- Direct prompting for detailed answers with reflection and verification.

- Extracting readable outputs from DeepSeek-R1-Zero.

- Human post-processing to refine results.

By introducing cold-start data, DeepSeek-R1 achieves improved readability.

Stage 2: Reasoning-oriented Reinforcement Learning

After fine-tuning DeepSeek-V3-Base on cold-start data, a large-scale reinforcement learning (RL) process similar to DeepSeek-R1-Zero was applied. This phase enhances the model’s reasoning abilities, particularly in coding, mathematics, science, and logical reasoning—domains with well-defined problems and clear solutions.

One challenge observed during training is language mixing in CoT responses, especially when RL prompts involve multiple languages. To address this, a language consistency reward was implemented, which measures the proportion of target-language words in the response. While ablation studies indicated a minor drop in performance, this alignment improved readability and human preference. The final reward combines reasoning accuracy and language consistency, and RL training continues until convergence.
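The language consistency reward described above could look roughly like this. The tokenization by runs of letters, the ASCII-based `is_english_word` heuristic, and the plain sum used in `final_reward` are assumptions of this sketch; the review only says that the reward is the proportion of target-language words and that it is combined with accuracy.

```python
import re

def is_english_word(word: str) -> bool:
    """Crude heuristic (assumed): a word counts as English if it is ASCII letters only."""
    return word.isascii() and word.isalpha()

def language_consistency_reward(cot: str, is_target_word=is_english_word) -> float:
    """Fraction of words in the chain of thought that belong to the target language."""
    words = re.findall(r"[^\W\d_]+", cot)  # runs of Unicode letters, digits excluded
    if not words:
        return 0.0
    return sum(1 for w in words if is_target_word(w)) / len(words)

def final_reward(accuracy: float, consistency: float) -> float:
    """Combine the two signals; a plain sum is assumed here."""
    return accuracy + consistency

mixed = language_consistency_reward("The answer is 答案 four")
clean = language_consistency_reward("The answer is four")
```

A real implementation would need a proper language identifier, since an ASCII test cannot tell English from other Latin-script languages.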

Stage 3: Rejection Sampling and Supervised Fine-Tuning

Once reasoning-oriented RL converges, the checkpoint is used to generate Supervised Fine-Tuning (SFT) data for the next training phase. Unlike the cold-start data, which primarily targets reasoning, this stage incorporates diverse data types to improve DeepSeek-R1’s general-purpose capabilities.

- Reasoning Data: Prompts are curated, and multiple reasoning trajectories are generated using rejection sampling. Unlike earlier phases that relied solely on rule-based rewards, this stage integrates generative reward models by comparing model outputs with ground-truth data using DeepSeek-V3. Additionally, unreadable outputs with mixed languages, excessive length, or unnecessary code blocks are filtered out. Ultimately, this process yields 600k high-quality reasoning samples.

- Non-Reasoning Data: To enhance tasks such as writing, factual QA, self-cognition, and translation, 200k additional training samples are incorporated from DeepSeek-V3’s SFT dataset. For complex queries, an intermediate CoT is generated before answering, while for simple queries (e.g., “hello”), CoT is omitted.

Overall, DeepSeek-V3-Base is fine-tuned for two epochs using an 800k-sample dataset.
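The filtering applied to the reasoning data in this stage can be sketched as a simple rejection-sampling loop. Everything named here is hypothetical: `is_correct` stands in for either a rule-based check or a judgment by a generative reward model, `max_chars` is an arbitrary length limit, and the mixed-script and code-fence tests are crude stand-ins for the readability filters mentioned above.

```python
FENCE = "`" * 3  # Markdown code-fence marker

def has_mixed_scripts(text: str) -> bool:
    """Crude proxy for language mixing: both ASCII and non-ASCII letters occur."""
    letters = [c for c in text if c.isalpha()]
    return any(c.isascii() for c in letters) and any(not c.isascii() for c in letters)

def rejection_sample(candidates, is_correct, max_chars=2000):
    """Keep only sampled outputs that are judged correct and pass the readability filters."""
    kept = []
    for text in candidates:
        if not is_correct(text):
            continue  # wrong answers are rejected first
        if len(text) > max_chars or FENCE in text or has_mixed_scripts(text):
            continue  # too long, contains a code block, or mixes scripts
        kept.append(text)
    return kept

samples = [
    "answer: 4",
    "answer: 5",
    "answer: 4 答案",
    "answer: 4 " + "x" * 3000,
    "answer: 4 " + FENCE + "print(4)" + FENCE,
]
kept = rejection_sample(samples, is_correct=lambda t: "answer: 4" in t)
```

Only the first sample survives: the second is incorrect, and the remaining three fail the script, length, and code-block filters respectively.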

Stage 4: Reinforcement Learning for All Scenarios

To further align the model with human preferences, a secondary RL stage is introduced, refining both reasoning capabilities and alignment with user expectations. This phase integrates reward signals and diverse prompt distributions:

- Reasoning Data: Rule-based rewards, as in DeepSeek-R1-Zero, are applied to reinforce skills in mathematics, coding, and logical reasoning.

- General Data: A reward model evaluates helpfulness and harmlessness in open-ended tasks, following the DeepSeek-V3 preference pipeline.

- Helpfulness: The reward model assesses only the final summary, ensuring it is useful and relevant while preserving reasoning integrity.

- Harmlessness: The entire response, including both the reasoning process and summary, is reviewed to mitigate potential risks, biases, or harmful content.
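The difference in scope between the two general-data signals (summary only versus entire response) can be illustrated with a short sketch. The assumption that the summary is whatever follows the closing </think> tag, the reward models passed in as plain callables, and the sum used to combine them are all hypothetical choices for this example.

```python
def split_response(response: str):
    """Split a response into (reasoning, summary), assuming the summary follows </think>."""
    reasoning, sep, summary = response.partition("</think>")
    if not sep:
        return "", response.strip()  # no reasoning block found
    return reasoning.replace("<think>", "", 1).strip(), summary.strip()

def general_reward(response: str, helpfulness_rm, harmlessness_rm) -> float:
    """Score helpfulness on the summary only and harmlessness on the full response."""
    _, summary = split_response(response)
    return helpfulness_rm(summary) + harmlessness_rm(response)

# Toy stand-ins for the reward models, for illustration only.
toy_helpfulness = lambda text: 1.0 if text else 0.0
toy_harmlessness = lambda text: 0.0 if "unsafe" in text else 1.0

reasoning, summary = split_response("<think>check the units</think>The result is 3 m.")
score = general_reward("<think>unsafe idea</think>Here is a safe reply.",
                       toy_helpfulness, toy_harmlessness)
```

In the second call the summary is fine, but the harmlessness check still sees the reasoning text, so the response is penalized.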

By combining reinforcement learning, reward models, and diverse data sources, DeepSeek-R1 is trained to excel in reasoning while maintaining strong alignment with human preferences.

3. Distillation

To equip smaller models with reasoning capabilities similar to DeepSeek-R1, open-source models such as Qwen and Llama were fine-tuned on the 800k curated samples from stage 3 of the pipeline.

The base models used include:

- Qwen2.5: Math-1.5B, Math-7B, 14B, 32B

- Llama: 3.1-8B, 3.3-70B-Instruct (chosen for its superior reasoning ability over Llama-3.1)

Unlike DeepSeek-R1, the distilled models undergo only SFT, with no RL stage.

4. Experiment

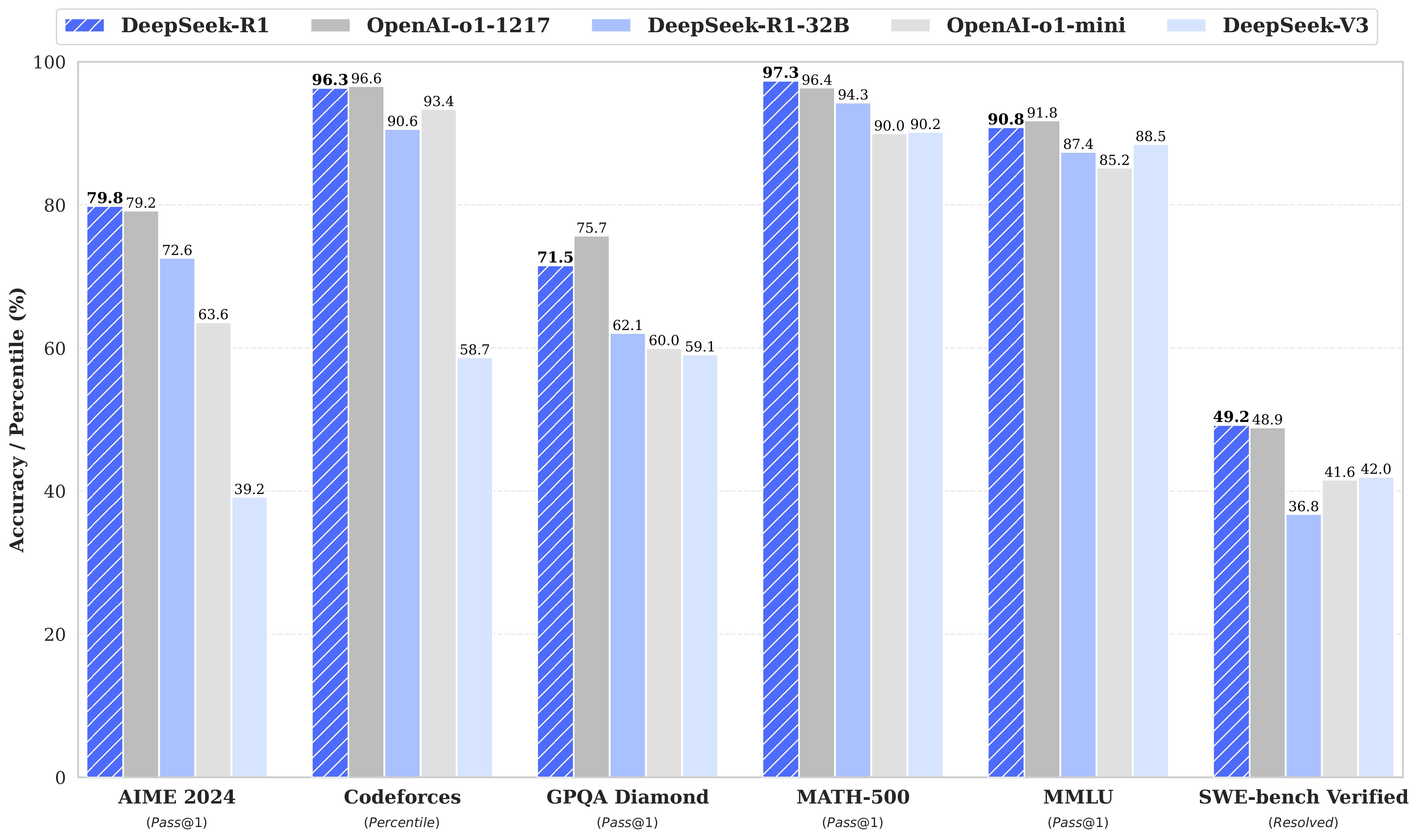

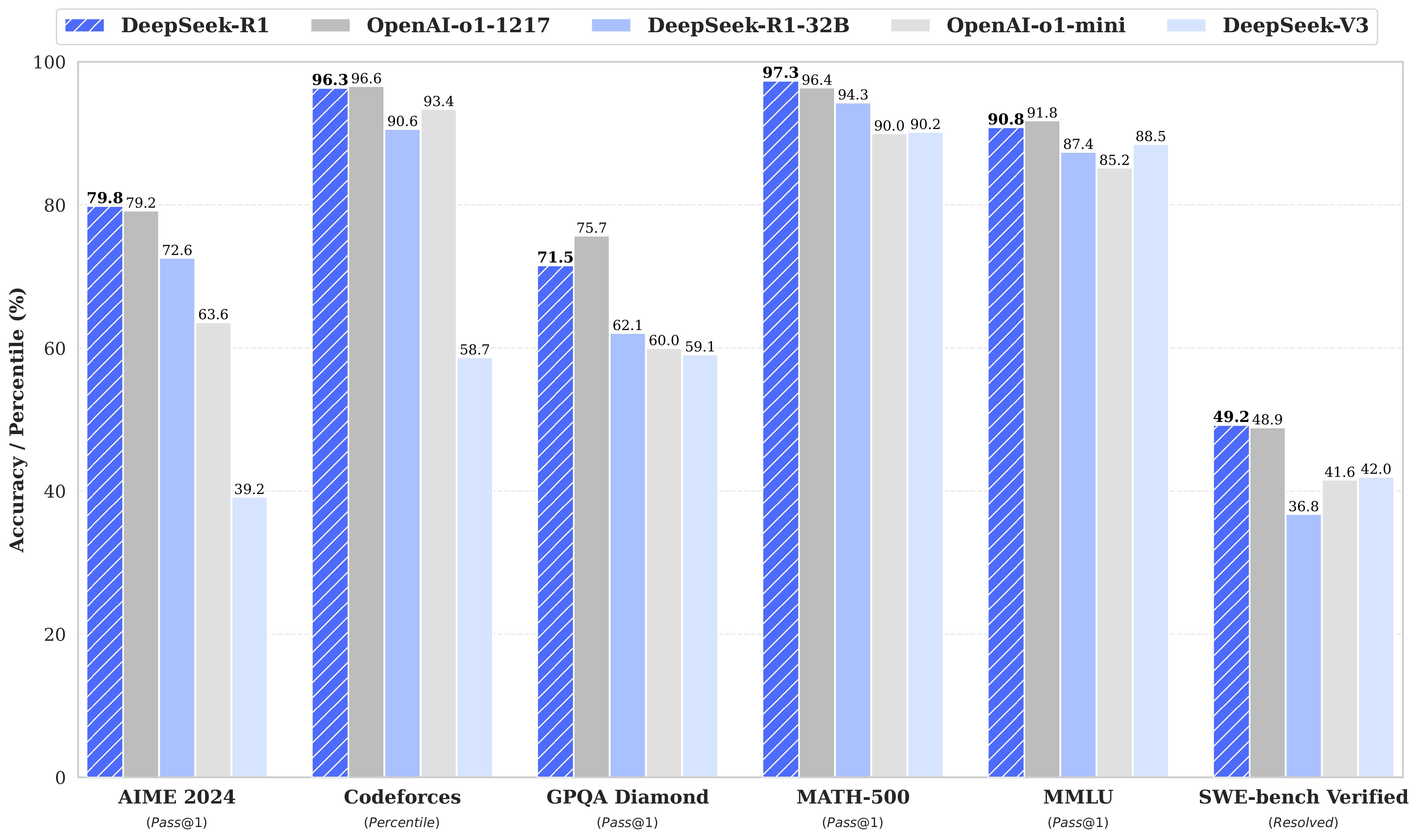

The “flagship model” DeepSeek-R1 was evaluated using a range of benchmarks covering math, coding, factual knowledge, and reasoning, including:

- Math & Reasoning: AIME 2024, MATH-500, GPQA Diamond, CNMO 2024

- Coding: LiveCodeBench, Codeforces, SWE-Bench Verified, Aider

- General Knowledge & QA: MMLU, MMLU-Redux, MMLU-Pro, C-Eval, CMMLU, SimpleQA, C-SimpleQA, IFEval, FRAMES

- LLM-based Open-Ended Generation: AlpacaEval 2.0, Arena-Hard

For the distilled R1 models, results were reported on AIME 2024, MATH-500, GPQA Diamond, Codeforces, and LiveCodeBench.

Evaluation Prompts & Setup

- Benchmarks use standard prompt formats (e.g., simple-evals for MMLU, Zero-Eval for MMLU-Redux).

- Few-shot CoT prompts are modified to zero-shot, as CoT in few-shot settings may hurt DeepSeek-R1 performance.

- LiveCodeBench uses CoT-based evaluation on data collected between Aug 2024 – Jan 2025.

- Codeforces evaluation includes 10 Div.2 contests with expert-crafted test cases.

For long-output reasoning models, pass@k evaluation is used to reduce variability: k responses per question are sampled with temperature 0.6 and top-p 0.95, and pass@1 is reported as the average correctness over those samples. AIME 2024 additionally reports cons@64 (majority-vote results over 64 samples).
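The two metrics can be computed per question as in the sketch below. The function names and the tie-breaking behavior of the majority vote (the answer seen first wins a tie) are choices made for this example.

```python
from collections import Counter

def pass_at_1(correct_flags) -> float:
    """Average correctness over the k sampled responses for one question."""
    return sum(correct_flags) / len(correct_flags)

def cons_at_k(answers, reference) -> float:
    """1.0 if the majority-vote answer over the k samples equals the reference."""
    majority, _ = Counter(answers).most_common(1)[0]  # ties go to the first-seen answer
    return 1.0 if majority == reference else 0.0

# Four sampled answers to a question whose reference answer is "4".
sampled = ["4", "5", "4", "4"]
p1 = pass_at_1([a == "4" for a in sampled])
c4 = cons_at_k(sampled, "4")
```

The benchmark score is then the mean of these per-question values. Averaging over several samples is what makes pass@1 less sensitive to a single unlucky generation than greedy decoding would be.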

Baselines

DeepSeek-R1 is compared against strong baselines, including DeepSeek-V3, Claude-Sonnet-3.5-1022, GPT-4o-0513, OpenAI-o1-mini, OpenAI-o1-1217, and for distilled models, QwQ-32B-Preview.

Generation Constraints

- Maximum token length: 32,768

- Pass@k evaluation with 4 to 64 samples per query to ensure stable performance estimates.

5. Discussion (Unsuccessful Attempts)

During the development of DeepSeek-R1, Process Reward Models (PRM) and Monte Carlo Tree Search (MCTS) were also tried, but neither approach succeeded.

Process Reward Model (PRM)

PRM aims to guide the model toward better reasoning by rewarding correct intermediate steps. However, it has three major limitations:

- Defining fine-grained reasoning steps is difficult. (Fine-grained approach: breaking a task into smaller sub-processes within a larger unit of work and executing them.)

- Determining the correctness of an intermediate step is challenging, as automated annotations are unreliable and manual annotation does not scale.

- PRM introduces reward hacking, requiring frequent retraining and additional resources.

While PRM can be effective in reranking responses or guided searches, its computational overhead outweighs its benefits in large-scale reinforcement learning.

Monte Carlo Tree Search (MCTS)

Inspired by AlphaGo and AlphaZero, MCTS was tested as a method to enhance reasoning by systematically exploring solution paths. The process involved tagging reasoning steps, using a value model to guide searches, and iteratively refining the training process. However, two major challenges emerged:

- The search space for token generation is exponentially larger than structured games like chess, making it difficult to scale effectively.

- The value model plays a crucial role in search quality, but training a reliable fine-grained value model is inherently difficult.
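To give a feel for the first challenge, the sketch below compares the number of possible paths in a search tree on a log scale. All four numbers are illustrative assumptions: roughly 35 legal moves over about 80 plies are commonly quoted ballpark figures for chess, while a 100,000-token vocabulary and a 100-token response are hypothetical round values, not figures from the paper.

```python
import math

def log10_paths(branching_factor: float, depth: int) -> float:
    """log10 of branching_factor ** depth, the number of root-to-leaf paths."""
    return depth * math.log10(branching_factor)

chess = log10_paths(35, 80)         # assumed ballpark for a chess game
tokens = log10_paths(100_000, 100)  # assumed vocabulary size and response length

print(f"chess:  about 10^{chess:.0f} paths")
print(f"tokens: about 10^{tokens:.0f} paths")
```

Even a short response under these assumptions spans a far larger tree than a full chess game, and reasoning traces are typically much longer than 100 tokens.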

While MCTS can improve inference when used with a pre-trained value model, iteratively boosting model performance through self-search remains a challenge.

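6. Seoul National University NLP Lab Meeting Notes

Paper Review Summary

- The DeepSeek models were trained on Chinese and English.

- How is the Korean performance?

- Inference test: with llama 8b and qwen2, Korean performance was poor (issues such as simple token repetition occurred)

- The models also took the wrong approach on prompts that ask them to classify a Korean sentence, such as "tell me whether this is ambiguous"

- In some cases the answer was output in English.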

Training Pipeline of DeepSeek-R1

- initial SFT w/ high quality examples → collected from DeepSeek-R1 Zero

- RL focusing on reasoning tasks (coding, math, …)

- collection of new training data through rejection sampling & SFT correct and readable samples in more general domains

- final RL across all task types: Rule-based reward or LLM feedback

The key finding of this paper is that reasoning ability can be improved through reinforcement learning alone, without SFT.

limitations

- general capability

- SE tasks

- Language Mixing in multilingual context